Interview: Albert Yeap, Professor of Artificial Intelligence, on ‘Westworld’

HBO’s ambitious new flagship show Westworld – a drama about a futuristic amusement park populated by artificial beings, or ‘hosts’ – is now a few episodes into its first season. Lucky for us, it’s available to stream on NEON each week on the same day each episode airs in the US.

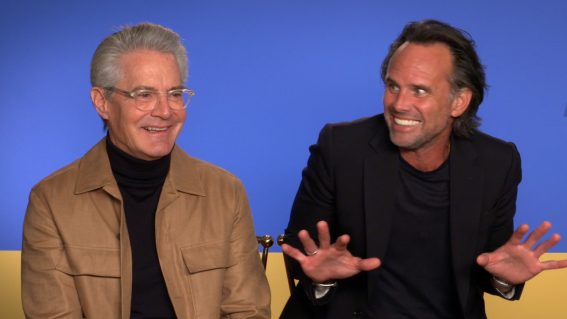

Already we’ve seen how showrunners Jonathan Nolan and Lisa Joy enjoy teasing viewers into theorising about what’s going on and then serving up about-turns each week. Letting the show bed in a little has allowed more of the robotic hosts’ behaviour to come to light, and so, five episodes in, we spoke with AUT Professor Wai (Albert) Yeap about the show’s depiction of artificial intelligence.

As we’ve seen in Person of Interest [also on NEON], it’s an area close to Nolan’s heart. So how’s it stacking up for Yeap, Professor of Artificial Intelligence and Director of the Centre for AI Research at AUT?

YES, SPOILERS FOLLOW

FLICKS: Keeping in mind that we don’t yet know exactly when and where ‘Westworld’ is actually set, how scientifically sound is the stuff that you’ve seen in the show so far?

ALBERT YEAP: I would say it is interesting enough from a scientific standpoint. For an educated person but not one working on AI – say, a physicist or a chemist – it should appear scientifically sound. For those working in AI, we see some major flaws but we’ll come to that in the other questions, I think.

For sure. As an expert, as someone practising in this field, do the hosts, the robots, in ‘Westworld’ meet your criteria for artificial intelligence so far?

Yes, they’re very good [chuckles]. The hosts are good. It’s the humans that are not good [laughs].

I think we’ll be seeing plenty more on that topic, in that vein. What are the key criteria that you’re looking for to assess artificial intelligence in ‘Westworld’?

For AI in Westworld, the key criterion would be performance – the Turing Test. I think the most important thing for a lot of people would be how closely it gets to being a real person. In this sense, the hosts are able to react very well with the guests and that’s why they are great, from an AI standpoint. However, I did not get how the guests know who are the robots and who are the other guests. I might have missed it. Still, the hosts behaved really well and the movie maker throws in one of Asimov’s classic laws, “robots must not harm humans”.

If it was you in that environment, would there be specific things that you’d be looking for to tell the difference between a human and a robot?

I think if you simply put me, or anyone else, in Westworld without knowing which is which beforehand, then I don’t think we could tell. That’s why I say that the hosts are very good. No way we can tell.

Have you made any sort of observations about the abilities, functions, or limitations of those robots so far?

Yes. That’s why I say excellent performance of the hosts, but the story side for the humans is weak. Now what I meant by that is that they try to control the hosts and analyse their behaviour by talking to them. Right? Now if they have got a host that good, then this would not be possible. You wouldn’t be able to check the performance by analysing it this way.

Is it for reasons of deception? Is that the main reason?

No. Just because it’s too complex. The programs that run on the hosts would be so complex that you simply cannot ask the host, “Oh, why do you do that?” and expect that it will give you a reason you could comprehend. The reasoning will often be very complex and it doesn’t follow exact rules. So you can’t. To think that some programmers could add some subtleties to make it behave dangerously or control/guide their evolution to become more human (and eventually become humans) is to portray us as some kind of superhuman controlling/creating these robots. Once you can create Westworld, it’s almost beyond our reach to analyse them, really.

When you’re programming a creation as complex as the ones in ‘Westworld’, does the creator accidentally put some human qualities into those subjects, do you think?

What you could say is that unexpected behaviours could emerge from your program. This is particularly true if you allow it to learn new information and learn how to react to different situations. And here lies the danger. The hosts could learn and play out many different behaviours without the humans realising it. They can do so at great speed and how they choose to act could become unpredictable.

If it’s the case in ‘Westworld’ that an evolutionary process has been deliberately set in motion, would that make you feel uncomfortable?

Before I answer this question, it is important to understand what is meant by setting up “an evolutionary process in motion”. A simple answer would be allowing the hosts to acquire (unlimited) information on their own and change their behaviour as they see fit. In this sense, what has evolved is the behaviour. The underlying process is basically the same. The robot is doing what we call symbol to symbol manipulations. If this is the case, then I would feel really uncomfortable. If they take control of us, we are then controlled by nothing but a machine. Here is where I feel the show’s portrayal of this process is the weakest from a scientific standpoint. They show the hosts slowly discovering who they are and the humans trying to repair them. To me, this won’t be the case. If they do, it will be immediate or they will exhibit “crazy” behaviour and get “killed off” as individuals. I think that stray host that uses a rock to destroy its head could be the one that got confused. I liked that part.

It seems in the show that one or more of the human scientists are hoping to bring about a higher level of consciousness within the hosts. Should we be concerned if humans try to do that?

Again, when you say that they are trying to do that, really they’re not. What they are doing is to allow them to “evolve” to generate more interesting or unexpected behaviour. As I said earlier, if they do it this way, then they won’t be able to control them much longer. This is dangerous and bad. As I said earlier, this is the machine taking over. While they exhibit complex behaviour, they are nonetheless machines. Sadly, most movies portray this aspect of AI and make AI seem evil. Another possibility, and a more complex one, is to allow the robot to continue with our evolutionary process. In this way, we create a jump across species, in fact, from biological to machines. But we need first to discover who we are and how we have evolved from other species to what we are.

With that in mind, do you have any guesses or theories about where some of the storylines might be going from here?

Most probably the robots will start learning to be “conscious” and then they will possibly strive to get control or create a complex interplay between hosts and humans.

Which you see as an inevitability with artificial intelligence whenever it reaches a certain capability, correct?

Yes, but as I said before, they are still just machines, albeit with behaviours as complex as those of humans.

So what’s the best approach for us to take with artificial intelligence? Should we, on the one hand, not develop it beyond a certain level? Or do we continue pursuing artificial intelligence that’s highly constrained with no access beyond the lab environment?

For me, the best approach is to use AI technology to help us unravel the mystery of the mind as far as possible. If we continue to use AI technology to create machines with complex behaviour, they will destroy us, as Professor Hawking predicted. A game like Westworld is dangerous.

When it comes to interacting with the robot that either has its own artificial intelligence, or is being controlled by a remote artificial intelligence, do you think there are particular risks to human life in a situation like that? And how would you try and control those risks?

Again, it depends on to what extent you let loose the robot. If you control their learning, then that would be fine. It’s just that if they are allowed to learn by themselves, that’s where trouble begins.

And in the case of the show, it seems one of the programming strategies is to let each machine be quite autonomous and just control itself during the day within a narrow framework. How do you feel about this, as a means of letting the machine run itself but putting limitations on it? I feel as a layman, a little bit uncomfortable.

It is OK if you have a fixed set of behaviours that they could choose to act out. If we limit that, then you are safe but it won’t be that interesting. Well, in the real world, some companies would, for profit, let them loose and generate more interesting behaviour. Then we would have big problems on our hands.

One of the dangerous things I thought about while watching the show is the effect on human beings from treating the robots like human beings. So, if you are murdering and raping robots, do you think that may change how we relate to each other as human beings?

Oh yes, yes. Because they are so real, it will affect our relationship with, or feelings for, the robots. We’ll increasingly think of them as humans – because, as I say, at the host level you can’t tell the difference. Oh, you were asking about how humans relate to each other. That I don’t know.

If you have antisocial urges or behaviours, is it a good idea to take that out on artificial intelligence? Should it be encouraged?

I don’t think I’m qualified to answer that question, but if you ask me to guess, I would say “no”!

Well, let’s put it another way. Let’s say that you’re an engineer who had worked on developing these robots. Would you like the way that you’re seeing them being treated?

It depends on what you created the robots for, I think.

Are there any other particular things you’d like to discuss about the show?

Yeah. I keep thinking about how the hosts could have original intent/consciousness. What Westworld is showing is how the hosts suddenly become “aware”, but could there really be some “special moments” that make us realise who we are? How is that different from processing other situations and generating some responses?

How do you think that change would come about in reality?

That is a hard one because that is exactly the key, the holy grail, for AI – to understand how it comes about. How do we actually have original intentionality – or what religious people refer to as the soul of the human beings. As I said before, I believe in using AI technology to unravel how the mind works. Perhaps then we will know the truth.

Would it come as a surprise to you to see that level of technology being used for an amusement park rather than something more scientific?

No, not at all. Not at all. No. In fact, I have a question for you: I would ask for the address of Westworld.

We both want to go there as soon as possible then, huh?

You want to go there as well?

Yeah, of course. You wouldn’t feel uncomfortable? You’d feel 100% safe?

Yeah, of course.

And, of course, you’d spend your time on intellectual pursuits, wouldn’t you?

Definitely. Of course, I’d be observing them. I’d try to find the maze [laughter].

This lovely piece of content is brought to you by NEON, the only subscription video on demand service that lets you be as demanding as you like (provided you pay the bill).
