“We cannot stop it from becoming bad” – an A.I. expert talks Westworld

A conversation with a Professor of Artificial Intelligence about ‘Westworld’, season two of which streams on NEON from April 23.

The long wait for the second season of Westworld is almost over, with new episodes streaming weekly on NEON from April 23. Partway through the first season, we discussed the show with AUT Professor Wai (Albert) Yeap. He's the Director of the Centre for AI Research at AUT, so we didn't want to miss the chance to follow up our conversation with this expert in the field. Check out our chat about the science of artificial intelligence and how Westworld stacks up against current understanding.

FLICKS: When we’ve spoken in the past about AI, there’s been a lot of discussion about the theoretical dangers. Many of the things that we’ve seen in the second half of the season are by design, manipulated by humans. So let me pose the question: should we only trust artificial intelligence as much as we trust a human?

Ooh. That is a hard question. Well, you know, it's hard to say whether we can trust humans. Humans themselves – now there's the problem. Nowadays, for example, researchers are discussing ethics and letting A.I. learn ethics. Now, the trouble with that idea is that they could learn to be bad and like it. I mean, we have lots of examples in our own society where we know that something is wrong, and yet we still do it.

So letting them learn is no guarantee. Back to your question about whether we can trust humans: of course, you can't trust humans. There will always be someone with their own ulterior motives. They have their own ideology, their own thing that they want to discover, and so they can change things to their liking. So once you allow that, it's hard.

For the foreseeable future, there will still be a human behind the process of designing an artificial intelligence, correct?

Yes. But that doesn't mean we can control them. We may be the ones designing it, but that doesn't mean we can control it.

I don't think it came as a huge surprise to viewers of 'Westworld' that the show ended up having some major characters who didn't realize they were artificial intelligences. How sophisticated, from a scientific point of view, would technology have to be to hide the nature of an artificial intelligence from itself?

That is quite easy because they would never know what they are themselves anyway.

They're always in the loop. So, if we are able to train them to an extent where they start asking questions, then they would just be humans. We are just in the loop. We don't know who we are, why we are here, where we are going. And I think a lot of us ask these questions our whole lives.

Do you think that an artificial intelligence would have existential questions?

I'm thinking about the fact that, at the moment, the technology simply goes from one symbol to another and tries to relate those symbols. If they reach a point where they start to ask about meanings of those symbols that are not in the system, or that they cannot find in the world, would that become an existential question?

Whether that's within the capability of the type of logic they think with? Is that what you mean?

Yes. The way they get meaning is to look things up in the dictionary and say, “Okay. The meaning of flower…”. With something that's an object, they refer to an image of a flower. Then, if they know how to perform actions on flowers, they will remember those actions as performed on flowers. Now, once they start to ask what the meaning of life is, there's no such reference, because they would then be like us and think in the abstract.

If they start asking questions that are existential – whether they exist, or whether they should be doing this, or whether they should be doing that… Then, all hell breaks loose, so to speak.

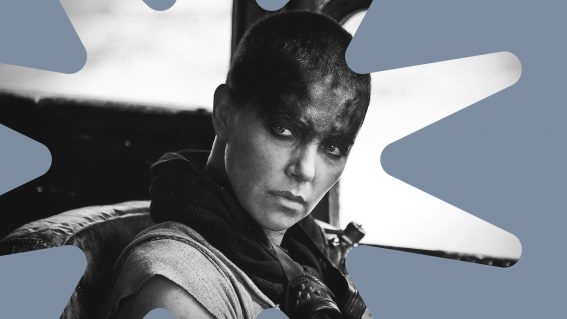

We see a little bit of that curiosity about how the A.I. will behave in scenes with Dolores being questioned. A scientific curiosity in how she’s processing things.

Yeah. But here's where I think it breaks down again from the scientific standpoint, because once they are able to start asking that question, they are more powerful than the hosts shown at the beginning of the show. The beginning of the show is make-believe, I guess. So that's easy. The hosts just follow the actions they are programmed to, or they search for a meaning that worked for others, or improvise an answer. But they don't understand the answer or what it means. It's for the humans to interpret that, and they're happy with that as a game. But once Dolores is able to start asking those questions, it is a completely different platform. She would be more powerful than she is in the show. And I think that's where the show fails.

You think that she would emerge as a stronger entity from that process?

I have to say so, because they are not programmed that way, and therefore they shouldn't be able to do it. Why they are able to do it, the show didn't explain. So how Dolores is able to evolve to that stage is left in the dark. They shouldn't be able to.

My reading of the first season was that scientists had wanted to develop a true artificial intelligence and that they kept coming up against human limitations – making something within the scope of human design was always just coming up short of that. Was that your interpretation as well?

Mm, no. No.

How did you read what Anthony Hopkins’ character was trying to achieve with the events he ended up setting in motion in the last episode?

Yes, he tried to do that. But it cannot be done in a setting like Westworld. So we need to know more about his research into the problem. Now, from the show, it appears that he created this world and let the hosts learn. But at the same time, we have been shown in many episodes that they can't really learn. They are given scripts, and then their memories are wiped. They are controlled.

Now, if they are controlled by all these mechanisms, then they cannot get out of the loop. They cannot learn. And then the experiments of Hopkins' character will fail. They would never get out. So he has to do something else. He has to experiment with the robots. That's the thing. He did it. As I think I mentioned last time, a lot of movies depict robots suddenly waking up to the fact that they are, kind of, “conscious.” But they can't do that. Not with current technology. It would have to be something we haven't done yet.

Going back to trusting A.I., how dangerous do you think the human ego is, in terms of what people may want to create in this sphere?

Oh, well, human ego is always a dangerous mix. Yes. Very dangerous. It's just like the creation of the bomb, or chemical weapons and all those things. They could always be used in a very bad way. But it's the science that is important. That's the other side of the coin – if we can master the science and understand it, then we can progress as a society. That's what I am trying to push hard on in terms of AI. A lot of researchers now argue about ethics, try to put ethics into A.I., try to put control into A.I. But I pointed out in a recent paper that with all these things, if you make a more, what they call, “friendly” A.I., then you're simply making the A.I. more, and more, and more powerful. And it doesn't stop, no matter how many controls you put in and how much ethics we train into it. We cannot stop it from becoming bad.

The thing is that researchers are focusing on improving A.I., regardless of whether it's good or bad. And I think that is bad. We all think A.I. is just a tool. But A.I. is not just a tool. A.I. is more powerful than that. A.I. is a platform where I think we can discover ourselves, where we can know what we are and who we are. Then we can move forward to the society of the future. If we don't do that, then we are in danger of simply destroying our society with machines. That is my conclusion.

Do we have to always be very vigilant about making sure they live in environments that can be controlled?

No. My answer is you can’t control. No matter how vigilant you are, you will lose to the robot. Full stop.

What’s the solution to that problem?

Look, the solution to that problem is that we must take this opportunity, before the machines become too powerful, to use A.I. as a platform to understand who we are. You see, once we understand the processes that nature gives us, we can program those processes into the robot, and then we kind of transform ourselves from flesh and bones into digital form. Then there's no difference between the robot and the human. They are the same thing. As I said, at the moment they are not the same thing. The robots that we know now, in 2018, are just machines, because they are made by artificial processes, processes that humans made. But once their processes are the same as ours, they are no different.

I think there’s a hint that Hopkins’ character’s consciousness may exist within the digital environment after the season finale. Maybe you guys aren’t so different after all…?

Well, at a certain level, yes. We are not that different [laughs] at a certain level. As I said, I do not know his scientific approach to doing that. And I'm not convinced that setting up a Westworld, with the hosts and the humans mixed together, would allow the robots themselves to become conscious. Okay? That to me is not quite possible. But on the other hand, that is TV. So they stretch the limits. We are the same in the sense that we are trying to achieve the same thing. In a loose sense, he's trying to give the robots consciousness, whereas I'm not trying to give consciousness to the robots. I'm trying to understand consciousness using robots. Okay? And once I understand how it works, if I then create a robot with consciousness, my claim is that there's no difference from a human being born with consciousness.

If you had the ability to make robots as you see in ‘Westworld’, what would be the most interesting thing to observe from a scientific point of view?

I would create a robot in Westworld in the hope of pursuing my scientific passions. It's quite simple, but very hard to make. I need to make a machine, okay, that I do not programme. It would need to be able to understand the world on its own, just like an infant. You see, the infant is born, all right? Nobody teaches infants what they learn in their first year. An infant learns on its own. So I need to capture that algorithm and put it in the robot, and then my version of the Westworld experiment begins. That robot will start to learn, interact with humans, learn the language, start to say maybe “mama, papa”, and then start going to school and learning. That would tell me what constitutes consciousness.

There isn't really a robot that can do all these kinds of things, as in Westworld, and then suddenly turn conscious. That's what the other movies all show. Ex Machina and all these, they have a complete robot, and then it starts learning. That's too late. We think we have intelligent algorithms, but maybe we don't realise they're actually crap algorithms compared to nature's, and we make them look powerful. They're actually not as powerful. Nature's algorithm is amazing. It's amazing, and we need to study that. Very few AI researchers are studying that. That's the problem.

This lovely piece of content is brought to you by NEON, where Westworld Season 2 streams from April 23. If you're not on NZ's best streaming service already, sign up to their TV & Movies package now.

Want to get ahead on the events of Season 2? The showrunners have taken the radical step of releasing a primer to roll out all of the key plot points in an effort to stop spoilers. Watch, but only if you dare: